Controlling a robot arm with your mind? Even a monkey can do it

In previous articles we described a few interfaces meant to connect our thoughts to machines, such as the Emotiv EPOC and Honda's BMI. Back in May 2008, Dr. Schwartz, a professor of neurobiology at the University of Pittsburgh, conducted experiments that taught a monkey to feed itself by using its thoughts to control a robotic arm with four degrees of freedom, featuring shoulder joints, an elbow, and a simple gripper.

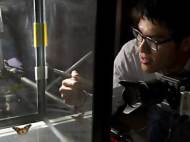

In the first video below, you can see how monkeys in Dr. Schwartz's lab move a robotic arm to feed themselves marshmallows and chunks of fruit while their own arms are restrained. He and his fellow researchers from the Motorlab at the University of Pittsburgh have recently demonstrated further progress: a monkey was able to use its thoughts to control a more sophisticated robotic arm.

In the experiments conducted this year, two sensors were implanted into the monkey's brain: one in the hand area and the other in the arm area of its motor cortex. The sensors monitor the firing of motor neurons and send the data to a computer, which translates the firing patterns into commands that control the robotic arm.
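The article does not say which algorithm turns the firing patterns into arm commands. Purely as an illustration, here is a minimal Python sketch that assumes a simple linear decoder: for each time bin, subtract each neuron's resting (baseline) firing rate and take a weighted sum of what remains for every degree of freedom, yielding one velocity command per joint. The function `decode` and all baseline and weight values are hypothetical; this is not the Motorlab's actual decoder, and real systems calibrate such parameters from recorded data.

```python
"""Toy linear decoder: neural firing rates -> joint velocity commands.

Assumption: a simple linear mapping with made-up weights, for illustration
only. It is not the decoder used in the Pittsburgh experiments.
"""
from typing import List, Sequence

N_DOF = 7  # the advanced arm in this article has seven degrees of freedom


def decode(rates: Sequence[float],
           baseline: Sequence[float],
           weights: Sequence[Sequence[float]]) -> List[float]:
    """Map one time bin of firing rates to one velocity command per DOF.

    rates:    spikes/s for each recorded neuron in the current bin
    baseline: each neuron's resting firing rate (hypothetical calibration)
    weights:  one row per DOF, one weight per neuron (hypothetical calibration)
    """
    if len(rates) != len(baseline):
        raise ValueError("rates and baseline need one entry per neuron")
    # Subtract each neuron's baseline so only changes in firing drive the arm.
    modulation = [r - b for r, b in zip(rates, baseline)]
    commands = []
    for row in weights:
        if len(row) != len(modulation):
            raise ValueError("each weight row needs one entry per neuron")
        # Weighted sum of the neurons' modulation -> velocity for this DOF.
        commands.append(sum(w * m for w, m in zip(row, modulation)))
    return commands


if __name__ == "__main__":
    # Three hypothetical neurons; weights picked by hand for the demo.
    baseline = [10.0, 20.0, 5.0]
    weights = [[0.5, 0.0, 0.0]] + [[0.0, 0.0, 0.0]] * (N_DOF - 1)
    rates = [30.0, 20.0, 5.0]  # only neuron 0 fires above its baseline
    print(decode(rates, baseline, weights))
```

Here only the first degree of freedom receives a nonzero command, because only the first neuron departs from its baseline and only the first weight row listens to it. A linear map keeps the example short; it stands in for whatever calibrated model a real brain-machine interface would use.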

In the video below, the monkey uses its right arm to tap a button that triggers a robotic manipulator to move a black knob to an arbitrary position. The monkey is then seen controlling its articulated robotic arm to grasp the knob. After touching the knob, the monkey places its mouth on a straw and is rewarded with a drink. Through constant repetition, the monkey eventually starts placing its mouth on the straw before touching the knob, knowing that a drink is coming.

The advanced robotic arm has seven degrees of freedom. The three additional degrees of freedom belong to an articulated wrist that can perform pitch, roll, and yaw movements, which let the monkey turn the knob precisely by rotating the mechanical wrist.

By putting the brain in direct communication with machines, researchers will one day be able to engineer and operate advanced prosthetics in a natural way, helping paralyzed people live a close-to-normal life. However, we are still far from realizing such ideas: we need less invasive ways to read the information, as well as better algorithms and knowledge to analyze the gathered data.
