ASIMO can recognize three speakers simultaneously

In one of our previous articles we started to cover the features of ASIMO – one of the most advanced humanoid robots of today. Besides footsteps planning, ASIMO can understand three humans speaking simultaneously (to some degree). Hiroshi Okuno at Kyoto University, and Kazuhiro Nakadai at the Honda Research Institute in Saitama, both in Japan, have designed the software, which they call HARK. Okuno and Nakadai claim it can follow three speakers simultaneously with 70 to 80 percent accuracy when installed into Honda’s ASIMO robot.

In one of our previous articles we started to cover the features of ASIMO – one of the most advanced humanoid robots of today. Besides footsteps planning, ASIMO can understand three humans speaking simultaneously (to some degree). Hiroshi Okuno at Kyoto University, and Kazuhiro Nakadai at the Honda Research Institute in Saitama, both in Japan, have designed the software, which they call HARK. Okuno and Nakadai claim it can follow three speakers simultaneously with 70 to 80 percent accuracy when installed into Honda’s ASIMO robot.

HARK uses an array of eight microphones to work out where each voice is coming from and isolate it from other sound sources. The software first processes it by determining how reliably it has extracted an individual voice, before passing it onto speech-recognition software to decode. That quality control step is important. The other voices are likely to confuse speech recognition software. So any parts of the sound file that contain a lot of background noise across a range of frequencies are automatically ignored when the patched-up recording of each voice is passed on to a speech-recognition system.

The HARK system actually goes beyond normal human listening capabilities, because it can listen to several sources at once, and not just focus on a particular single sound source. While focusing on a single voice among many is known as the “cocktail party effect“, Okuno calls the ability to focus on multiple voices at once the “Prince Shotoku Effect”. According to Japanese legend, Prince Shotoku listened to 10 people’s petitions at the same time.

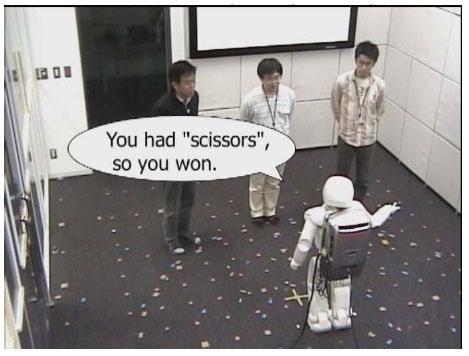

In their demonstration, modified ASIMO’s ability is being used to judge the vocal variation of rock-paper-scissors game, where three people call out their choices at once. It might seem simple, but the number of voices and the complexity of the sentences the software can deal with should grow in future. The array of eight microphones is placed around the ASIMO’s face and body, helping the software to accurately detect and isolate simultaneous voices. “The number of sound sources and their directions are not given to the system in advance,” says Nakadai.

Rock-paper-scissors uses a small vocabulary, making the task easier. When Okuno and Nakadai tried using their software to follow several complicated sentences at once, as three people shouted out restaurant orders, it could only identify 30 to 40 percent of what was said. Before we can match the performance of human listeners in ‘cocktail party’ situations.

Leave your response!