Cody – mobile manipulating robot could aid us in the future

One of the main subjects of our website is robots built to assist people. In this article we look at the humanoid robot named Cody. Cody comes from Georgia Tech’s Healthcare Robotics Lab (the lab that also developed El-E, another assistive robot we have already written about). Apart from the difference in design, Cody can be controlled in its own distinctive ways.

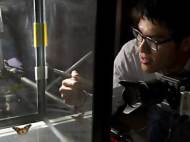

The robot is a statically stable mobile manipulator. Its components are arms from MEKA Robotics (MEKA A1), a Segway omni-directional base (RMP 50 Omni), and a 1 degree-of-freedom (DoF) Festo linear actuator. The two arms are 7-DoF anthropomorphic arms with series elastic actuators (SEAs), and the robot’s wrists are equipped with 6-axis force/torque sensors (ATI Mini40).

It can open doors, drawers, and cabinets using equilibrium point controllers developed by Advait Jain and Prof. Charlie Kemp. It also has a direct physical (touch-based) interface for repositioning the robot, developed by Tiffany Chen and Prof. Charlie Kemp. Much of the code controlling this robot is open-source and has ROS (Robot Operating System) interfaces.

Advait Jain and Prof. Charlie Kemp built Equilibrium Point Controllers (EPCs) to control the low-mechanical-impedance arms and to open doors, drawers, and cabinets. In general, an EPC treats a joint as a simulated torsional viscoelastic spring with constant stiffness and damping and a variable equilibrium angle; the controller moves the arm by changing that equilibrium angle. These controllers appear to greatly simplify the creation of robot behaviors, and they work at very low update rates (around 10 Hz). You can learn more in the paper they published: Pulling Open Doors and Drawers: Coordinating an Omni-directional Base and a Compliant Arm with Equilibrium Point Control (PDF).
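To make the spring analogy concrete, here is a minimal sketch of a single joint driven by a simulated torsional spring-damper whose equilibrium angle is updated at 10 Hz. It is not the lab's code: the class name `VirtualSpringJoint`, the `simulate` helper, the joint inertia, and all numeric values are assumptions chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class VirtualSpringJoint:
    """One joint modeled as a simulated torsional spring-damper (hypothetical)."""
    stiffness: float        # N*m/rad, held constant
    damping: float          # N*m*s/rad, held constant
    eq_angle: float = 0.0   # rad, the only quantity the controller changes

    def torque(self, angle: float, velocity: float) -> float:
        # Spring pulls toward the equilibrium angle; damper resists motion.
        return -self.stiffness * (angle - self.eq_angle) - self.damping * velocity


def simulate(joint, eq_angles, inertia=0.05, control_hz=10.0, sim_hz=1000.0):
    """Feed a sequence of equilibrium angles at control_hz; return the final angle.

    The joint physics is integrated at sim_hz (semi-implicit Euler), while the
    equilibrium angle only changes once per control period.
    """
    angle, velocity = 0.0, 0.0
    dt = 1.0 / sim_hz
    steps_per_update = int(sim_hz / control_hz)
    for eq in eq_angles:
        joint.eq_angle = eq  # low-rate command: just move the equilibrium point
        for _ in range(steps_per_update):
            accel = joint.torque(angle, velocity) / inertia
            velocity += accel * dt
            angle += velocity * dt
    return angle


if __name__ == "__main__":
    joint = VirtualSpringJoint(stiffness=5.0, damping=1.0)
    # Hold the equilibrium at 0.5 rad for 3 seconds (30 updates at 10 Hz).
    print(simulate(joint, [0.5] * 30))
```

In this sketch the high-level behavior only has to choose where the equilibrium angle should be; the spring and damper terms take care of how the joint gets there, which is one way to see why a low update rate can be enough.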

Tiffany Chen and advisor Prof. Charlie Kemp developed two interfaces for repositioning the robot: one is a typical gamepad (PlayStation-like) interface, while the other is a novel Direct Physical Interface (DPI). In a user study of 18 nurses from the Atlanta, GA area, the researchers showed that the DPI is superior to a comparable gamepad interface according to several objective and subjective measures. The direct physical (touching) interface is preferred over the gamepad interface because it is much more intuitive.

You grab hold of the robot and reposition it as you would when dancing with someone or guiding them by the hand or arm. Force sensor readings and end-effector positions are measured and used to compute forward/backward and angular velocities of the robot’s base. The user can also grab the robot’s highly compliant arms and either push them toward or pull them away from the robot to change the angles of the robot’s shoulder joints. These angles are measured and used to compute direct side-to-side velocities of the robot’s omni-directional base. You can learn more from the paper they published: Lead Me by the Hand: Evaluation of a Direct Physical Interface for Nursing Assistant Robots (PDF).
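The mapping from touch to motion described above can be sketched as a simple proportional scheme. This is only an illustration under stated assumptions: the function `dpi_command`, the particular split (forward force to forward speed, sideways hand offset to turning rate, shoulder angle change to sideways speed), and every gain, deadband, and speed limit are hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass


@dataclass
class BaseVelocity:
    forward: float    # m/s, positive moves the base forward
    sideways: float   # m/s, positive moves the base to its left
    angular: float    # rad/s, positive turns counter-clockwise


def deadband(value: float, threshold: float) -> float:
    """Ignore small readings (sensor noise, a resting hand)."""
    if abs(value) < threshold:
        return 0.0
    return value - threshold if value > 0 else value + threshold


def clamp(value: float, limit: float) -> float:
    """Keep a command within [-limit, +limit]."""
    return max(-limit, min(limit, value))


def dpi_command(force_forward: float,
                hand_lateral_offset: float,
                shoulder_angle_delta: float) -> BaseVelocity:
    """Turn wrist force, hand position, and shoulder angle into base velocities.

    force_forward:        N, push/pull measured by the wrist force/torque sensor
    hand_lateral_offset:  m, sideways displacement of the end effector
    shoulder_angle_delta: rad, change in shoulder angle from its rest pose
    All gains and thresholds below are made-up illustrative values.
    """
    forward = clamp(0.02 * deadband(force_forward, 2.0), 0.3)
    angular = clamp(1.5 * deadband(hand_lateral_offset, 0.03), 0.5)
    sideways = clamp(0.4 * deadband(shoulder_angle_delta, 0.05), 0.2)
    return BaseVelocity(forward=forward, sideways=sideways, angular=angular)


if __name__ == "__main__":
    # A gentle forward pull with the arm held straight ahead.
    print(dpi_command(force_forward=12.0,
                      hand_lateral_offset=0.0,
                      shoulder_angle_delta=0.0))
```

The deadband and clamp are design choices of this sketch: the first keeps the base still when someone merely rests a hand on the robot, and the second caps the speed no matter how hard the user pushes.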

The robot still needs a lot of software development to make it less dependent on user input, as well as better manipulators (some sort of agile robotic hands) to make it more useful in real-life situations.
