Cognitive Universal Body – iCub project

Mental processes are significantly shaped by the physical structure of the body and by its interaction with the environment. To study this link, the European Commission has been funding a five-year open project since 2005. Named RobotCub, the project aims to move this research agenda forward by providing a humanoid toddler platform that can be used to explore and study cognition.

Funded under the Information Society Technologies (IST) priority of the Sixth Framework Programme (FP6), RobotCub brings together 11 European research centers, two in the US, and three in Japan for a five-year period. The partners specialize in robotics, neuroscience, or developmental psychology.

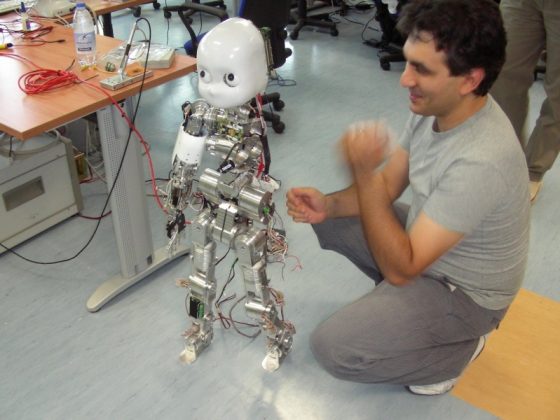

iCub is a humanoid robot the size of a 3.5-year-old child: it is 100 cm tall and weighs 23 kg. Its mechanical joints let it move its head, arms, fingers, eyes, and legs much as humans do, because cognition is believed to be closely tied to the way we physically interact with the world.

This is an open project in several ways: the platform is distributed openly, the software is developed as open source, and the project is open to new partners and collaborations worldwide.

The team links a computer simulation of a brain to iCub so that the robot can process information about its environment and send bursts of electrical energy to its motors. This lets it move its arms, head, eyes, and fingers to carry out very simple tasks, such as lifting a ball and moving it from one place to another.

By now, iCub is able to crawl and walk, make human-like eye and head movements and recognize and grasp objects like a toddler.

A consortium led by the University of Plymouth, a world leader in cognitive robotics research, beat competition from 31 others to win a €5.3 million grant for the development of Italk (Integration and Transfer of Action and Language Knowledge in Robots), a project that will enable little iCub to learn to speak.

In the long term, the researchers believe their work could help develop a new generation of intelligent factory robots that are far more useful than today's. Another potential application is personal robots that learn their owners' behaviors and habits and adapt to them.

After being shown how to hold the bow and release the arrow, the iCub robot learns by itself to aim and shoot arrows at the target. It learns to hit the center of the target in only 8 trials. The learning algorithm, called ARCHER (Augmented Reward Chained Regression), was developed and optimized by the same team that created the pancake-flipping robot we described in one of our previous articles.

ARCHER uses a chained local regression process that iteratively estimates new policy parameters that are more likely to achieve the goal of the task, based on the experience gathered so far. An advantage of ARCHER over other learning algorithms is that it uses richer feedback about the result of each rollout: rather than a single scalar reward, it receives the position where the arrow hit the target.
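To make the regression step concrete, here is a minimal Python sketch of an ARCHER-style update under simplifying assumptions: two policy parameters, a 2D hit position as feedback, and a local linear model fitted through the three best rollouts so far (a secant-style simplification, not the authors' implementation). The simulator `simulate_hit` and its coefficients, as well as the helpers `distance` and `archer_like`, are hypothetical.

```python
import math

def simulate_hit(theta):
    """Hypothetical archery simulator (NOT iCub's real dynamics).

    Maps two policy parameters to the (x, y) position where the arrow
    lands; the target center is at (0, 0).
    """
    t0, t1 = theta
    return (2.0 * t0 + 0.1 * t1 ** 2 - 1.0,
            1.5 * t1 - 0.2 * t0 + 0.5)

def distance(hit, goal=(0.0, 0.0)):
    """Euclidean distance between a hit position and the goal."""
    return math.hypot(hit[0] - goal[0], hit[1] - goal[1])

def archer_like(execute, rollouts, goal=(0.0, 0.0), max_updates=10, tol=1e-9):
    """Simplified ARCHER-style learner (an assumption, not the original).

    rollouts: list of (theta, hit) pairs already executed. Each update fits
    a local linear map from hit offsets to parameter offsets through the
    3 best rollouts and extrapolates it to the goal.
    """
    rollouts = list(rollouts)
    for _ in range(max_updates):
        rollouts.sort(key=lambda ro: distance(ro[1], goal))
        (p1, r1), (p2, r2), (p3, r3) = rollouts[:3]
        if distance(r1, goal) < tol:
            break  # already close enough to the center
        # Matrix M: columns are the hit offsets of rollouts 2 and 3 w.r.t. the best.
        a, b = r2[0] - r1[0], r3[0] - r1[0]
        c, d = r2[1] - r1[1], r3[1] - r1[1]
        det = a * d - b * c
        if abs(det) < 1e-15:
            break  # degenerate (collinear) hits: no local model
        m_inv = ((d / det, -b / det), (-c / det, a / det))
        # Matrix P: columns are the matching parameter offsets.
        p = ((p2[0] - p1[0], p3[0] - p1[0]),
             (p2[1] - p1[1], p3[1] - p1[1]))
        # Local linear model A = P @ M^-1 maps hit offsets to parameter offsets.
        A = [[sum(p[i][k] * m_inv[k][j] for k in range(2)) for j in range(2)]
             for i in range(2)]
        err = (goal[0] - r1[0], goal[1] - r1[1])
        theta = (p1[0] + A[0][0] * err[0] + A[0][1] * err[1],
                 p1[1] + A[1][0] * err[0] + A[1][1] * err[1])
        rollouts.append((theta, execute(theta)))  # one more trial
    rollouts.sort(key=lambda ro: distance(ro[1], goal))
    return rollouts[0], len(rollouts)

# Three initial rollouts, then let the learner refine the parameters.
initial = [(t, simulate_hit(t)) for t in [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]]
(best_theta, best_hit), n_trials = archer_like(simulate_hit, initial)
print(n_trials, best_theta, distance(best_hit))
```

Because each piece of feedback is a 2D vector rather than a single number, every pair of rollouts tells the learner both the direction and the size of the correction, which is why a regression-based update can converge in a handful of trials in this toy setting.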

For the archery training, ARCHER modulates and coordinates the motion of the two hands, while an inverse kinematics controller drives the motion of the arms. After every rollout, the image processing module automatically detects where the arrow hit the target, and this position is sent as feedback to ARCHER. The image recognition uses Gaussian Mixture Models (GMMs) for color-based detection of the target and the arrow's tip.
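A minimal sketch of GMM-based color detection follows, assuming the color models were already fitted offline on labeled pixels. Each pixel is scored under a target-color GMM and a tip-color GMM, given the label of the more likely model if its log-likelihood clears a threshold, and the offset between the tip centroid and the target centroid is returned as the feedback vector. The GMM parameters, the threshold, and the names `TARGET_GMM`, `TIP_GMM`, `gmm_log_likelihood` and `hit_offset` are hypothetical; using the centroid of target-colored pixels as the target center also assumes the target is not heavily occluded.

```python
import math

# Hypothetical color models (RGB, 0-255). Each component is
# (weight, mean, variance) with a diagonal covariance, assumed to have been
# fitted offline with EM on hand-labeled pixels. The numbers are made up.
TARGET_GMM = [(0.6, (200.0, 40.0, 40.0), (300.0, 200.0, 200.0)),
              (0.4, (230.0, 80.0, 60.0), (250.0, 250.0, 250.0))]
TIP_GMM = [(1.0, (40.0, 180.0, 60.0), (200.0, 200.0, 200.0))]

def gmm_log_likelihood(rgb, gmm):
    """log p(rgb) under a diagonal-covariance Gaussian mixture (log-sum-exp)."""
    logs = []
    for weight, mean, var in gmm:
        lp = math.log(weight)
        for x, m, v in zip(rgb, mean, var):
            lp -= 0.5 * (math.log(2.0 * math.pi * v) + (x - m) ** 2 / v)
        logs.append(lp)
    top = max(logs)
    return top + math.log(sum(math.exp(lp_i - top) for lp_i in logs))

def hit_offset(image, threshold=-20.0):
    """Return (dx, dy) in pixels from the target center to the arrow tip.

    image is a list of rows of (r, g, b) tuples. A pixel gets the label of
    the more likely color model if its log-likelihood exceeds the
    threshold; otherwise it is treated as background.
    """
    models = {"target": TARGET_GMM, "tip": TIP_GMM}
    sums = {name: [0.0, 0.0, 0] for name in models}  # row sum, col sum, count
    for r, row in enumerate(image):
        for c, rgb in enumerate(row):
            scores = {name: gmm_log_likelihood(rgb, g) for name, g in models.items()}
            label = max(scores, key=scores.get)
            if scores[label] > threshold:
                s = sums[label]
                s[0] += r
                s[1] += c
                s[2] += 1
    if sums["target"][2] == 0 or sums["tip"][2] == 0:
        return None  # target or tip not found in this frame
    t_row, t_col, t_n = sums["target"]
    a_row, a_col, a_n = sums["tip"]
    # Image y grows downward, so a negative dy means "above the center".
    return (a_col / a_n - t_col / t_n, a_row / a_n - t_row / t_n)

# Synthetic 20x20 frame: white background, red target, green arrow tip.
WHITE, RED, GREEN = (255, 255, 255), (200, 40, 40), (40, 180, 60)
frame = [[WHITE] * 20 for _ in range(20)]
for r in range(5, 15):
    for c in range(5, 15):
        frame[r][c] = RED
for r in (6, 7):
    for c in (12, 13):
        frame[r][c] = GREEN
print(hit_offset(frame))  # (3.125, -3.125)
```

In a real setup the pixel offset would still need to be mapped to target-plane coordinates before being fed back to the learner; that calibration step is outside this sketch.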

The experiments were performed on the iCub robot described above, with the target placed 3.5 m (11.5 ft) away from the robot. You can watch the experiment in the following video:

http://www.youtube.com/watch?v=QCXvAqIDpIw