Mobile, Dexterous, Social Robot – Nexi

Personal robots are an emerging technology with the potential to have a significant positive impact across a broad range of public-sector applications, including eldercare, healthcare, education, and beyond. The MDS (Mobile Dexterous Social) robot was designed and built through a collaboration between the MIT Media Lab’s Personal Robots Group, UMASS Amherst’s Laboratory for Perceptual Robotics, Xitome Design, and Meka Robotics.

The robot’s main chassis is based on the uBot5 mobile manipulator developed by the Laboratory for Perceptual Robotics at UMASS Amherst (directed by Rod Grupen). The mobile base is a dynamically balancing platform, akin to a miniature robotic Segway base.
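The article does not describe the uBot5 balancing controller. As a rough illustration of what "dynamically balancing" means, the sketch below models the base as a wheeled inverted pendulum and stabilizes its pitch with a simple PD law that accelerates the wheels toward the direction of lean. The length, gains, and function names are hypothetical, and a real controller would also regulate wheel position and velocity.

```python
import math

# Hypothetical parameters; the article gives no dimensions or gains for uBot5.
GRAVITY = 9.81          # m/s^2
PENDULUM_LENGTH = 0.5   # m, assumed distance from wheel axle to center of mass
KP = 30.0               # proportional gain on pitch (must exceed GRAVITY here)
KD = 5.0                # derivative gain on pitch rate


def base_acceleration(pitch: float, pitch_rate: float) -> float:
    """PD law: accelerate the wheels toward the side the body is leaning."""
    return KP * pitch + KD * pitch_rate


def simulate(initial_pitch: float, duration: float = 3.0, dt: float = 0.001) -> float:
    """Integrate the wheeled inverted pendulum and return the final pitch (rad)."""
    pitch, pitch_rate = initial_pitch, 0.0
    for _ in range(int(duration / dt)):
        a = base_acceleration(pitch, pitch_rate)
        # Pitch dynamics of a point mass on a massless rod over an accelerating base.
        pitch_acc = (GRAVITY * math.sin(pitch) - a * math.cos(pitch)) / PENDULUM_LENGTH
        # Semi-implicit Euler: update the rate first, then the angle.
        pitch_rate += pitch_acc * dt
        pitch += pitch_rate * dt
    return pitch
```

Calling `simulate(0.1)` starts the body leaning 0.1 rad and returns a pitch close to zero after 3 s. With these assumed values the linearized closed loop is stable because `KP` exceeds `GRAVITY` and `KD` is positive.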

The two 4-degree-of-freedom (DoF) arms are based on a series elastic DOMO/WAM-style arm design with force sensing to support position and/or force control. The arms are designed to pick up an object weighing 4.5 kg, and their length permits a large bimanual workspace on the ground plane. They are capable enough to support research in mobile dexterity and group manipulation tasks, such as several MDS robots carrying a large object together. The shoulder chassis sits above a torso “twist” degree of freedom.
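A series elastic arm senses force by measuring how much a compliant element between the motor and the link deflects. The minimal sketch below, assuming a linear torsional spring with a known stiffness, shows how that deflection yields a torque estimate and how a desired torque maps to a motor setpoint. The class and field names are illustrative and are not taken from the DOMO or WAM software.

```python
from dataclasses import dataclass


@dataclass
class SeriesElasticJoint:
    """Hypothetical series elastic joint: motor -> spring -> link."""
    spring_stiffness: float   # N*m/rad, assumed linear torsional spring
    motor_angle: float = 0.0  # rad, measured on the motor side of the spring
    joint_angle: float = 0.0  # rad, measured on the link side of the spring

    def sensed_torque(self) -> float:
        """Hooke's law: spring deflection times stiffness gives joint torque."""
        return self.spring_stiffness * (self.motor_angle - self.joint_angle)

    def motor_setpoint_for_torque(self, desired_torque: float) -> float:
        """Motor angle that would produce desired_torque at the current joint angle."""
        return self.joint_angle + desired_torque / self.spring_stiffness
```

For example, with a stiffness of 100 N·m/rad, a 0.25 rad deflection reads as 25 N·m. Commanding the motor to the angle returned by `motor_setpoint_for_torque` turns a force-control problem into a position-control problem on the motor side, which is one common way series elastic designs support both control modes.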

The robots can run on either tethered power or on-board Li-ion batteries. An on-board DSP and FPGA handle low-level motor control, and an embedded PC running Linux with wireless communication handles dynamic balancing of the base and force control of the arms. A small indoor laser rangefinder supports navigation and obstacle avoidance, and capacitive sensors in the white cosmetic shells detect human contact. Additional wireless embedded PCs support the robot’s sensory systems (visual, auditory, range, and tactile).

The 5-DoF lower arms and hands were developed by Meka, Inc. with MIT. The lower arm has forearm roll and wrist flexion. Each hand has three fingers and an opposable thumb; the thumb and index finger are controlled independently, while the remaining two fingers are coupled. A slip clutch in the wrist, together with shape deposition manufacturing of the fingers, makes the system robust to falls and collisions. When flexed, the fingers close compliantly around an object, allowing simple grasping and hand gestures.

The expressive head and face were designed by Xitome Design with MIT. The neck mechanism has 4 DoF, supporting bending at the base of the neck as well as pan-tilt-yaw motion of the head. The head can move at human-like speeds, supporting head gestures such as nodding, shaking, and orienting.

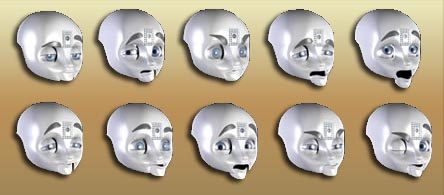

The 15-DoF face has several features that support a diverse range of facial expressions, including gaze, eyebrows, eyelids, and an articulated mandible for expressive posturing. Perceptual inputs include a color CCD camera in each eye, an indoor active 3D IR camera in the head, four microphones to support sound localization, and a wearable microphone for speech. A speaker supports speech synthesis.
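The article does not say how the four microphones localize sound. One common approach, sketched below under a far-field assumption, estimates the time difference of arrival between a pair of microphones by cross-correlation and converts it to a bearing with θ = arcsin(c·Δt / d). The function names, microphone spacing, and sample rate here are hypothetical; with four microphones, bearings from several pairs could be combined to resolve front/back ambiguity.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air at roughly 20 °C


def estimate_delay_samples(left: list[float], right: list[float], max_lag: int) -> int:
    """Lag (in samples) that best aligns right with left; positive means right hears it later."""
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        # Cross-correlation at this lag: sum of left[i] * right[i + lag].
        score = 0.0
        for i, sample in enumerate(left):
            j = i + lag
            if 0 <= j < len(right):
                score += sample * right[j]
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def bearing_from_delay(delay_s: float, mic_spacing_m: float) -> float:
    """Far-field bearing (radians from broadside) for a two-microphone pair."""
    ratio = SPEED_OF_SOUND * delay_s / mic_spacing_m
    ratio = max(-1.0, min(1.0, ratio))  # clamp so noise cannot push |ratio| past 1
    return math.asin(ratio)
```

For example, a delay of 3 samples at an assumed 16 kHz sample rate is 3/16000 s; with microphones 0.15 m apart that gives a bearing of roughly 0.44 rad (about 25°) from broadside.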

Although tremendous advances have been made in machine learning theory and techniques, existing frameworks do not adequately consider the human factors involved in developing robots that learn from people who lack technical expertise but bring a lifetime of experience learning socially with others. The creators of MDS refer to this area of inquiry as Socially Situated Robot Learning (SSRL).

The goal of SSRL is to enable social robots to learn what matters to the average citizen from the kinds of interactions people naturally offer, over the long term.