Robot butler HERB gets improved object recognition

As most researchers who work with computer vision know, it can be troublesome for a robot to discover the objects in its surroundings. Researchers at Carnegie Mellon University’s Robotics Institute used properties such as an object’s location, size, shape and even whether it can be lifted to enable a robot to continually discover and refine its understanding of the objects around it.

The process developed by the research team, called Lifelong Robotic Object Discovery (LROD), enables a two-armed mobile robot to combine color video, depth data from a Kinect camera and non-visual information to discover more than 100 objects in a laboratory furnished to resemble a home. The discovered items include computer monitors, plants and food.

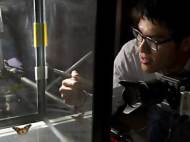

CMU researchers used their Home-Exploring Robot Butler (HERB) as the platform for LROD and devised the HerbDisc algorithm, which gradually refines its models of the objects and focuses its attention on those most relevant to the search.

Siddhartha Srinivasa, associate professor of robotics and head of the Personal Robotics Lab, jointly supervised the research with Martial Hebert, professor of robotics. Alvaro Collet, a scientist at Microsoft who was a robotics Ph.D. student co-advised by both, led the development of HerbDisc. The combined expertise of these researchers made it possible to put HERB’s abilities to good use.

Discovering and understanding objects in places filled with hundreds or thousands of things will be a crucial capability once robots become common assistants in our homes and workplaces.

Depth measurements from HERB’s Kinect sensors proved to be particularly important because they provide 3D data that defines the shape of household items. Other domain knowledge available to HERB includes location: whether something is on a table, on the floor or in a cupboard. The robot can also observe whether a potential object moves on its own, or whether it can be moved at all.

While our own definition of an object doesn’t depend on whether it can be lifted, HERB’s definition of an object is oriented toward its function as an assistive device for people. The robot can note whether something is in a particular place at a particular time, and it can use its arms to check whether it can lift the object, which is the ultimate test of whether it is an object.
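To make the idea of domain knowledge more concrete, here is a toy Python sketch of how cues like the ones above (resting on a surface, not moving on its own, enough 3D points, not failing a lift test) could act as filters on candidate objects. It is not HerbDisc’s actual algorithm, which is described in the paper; the `Candidate` fields, the `min_points` threshold and the example candidates are all hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Candidate:
    # Hypothetical candidate segment, as an upstream segmenter might produce it.
    name: str
    on_support_surface: bool   # resting on a table, floor or shelf
    moved_on_its_own: bool     # e.g. a person walking through the scene
    num_points: int            # size of the 3D point cluster from the depth camera
    liftable: Optional[bool] = None  # None: the robot has not tried to lift it yet


def passes_constraints(c: Candidate, min_points: int = 50) -> bool:
    """Return True only if the candidate satisfies every (assumed) domain cue."""
    if not c.on_support_surface:
        return False
    if c.moved_on_its_own:
        return False
    if c.num_points < min_points:  # too small to be a real object; likely noise
        return False
    # A failed lift attempt rules the candidate out; an untested one is kept.
    return c.liftable is not False


def discover(candidates: List[Candidate], min_points: int = 50) -> List[str]:
    """Keep the names of candidates that pass all domain-knowledge filters."""
    return [c.name for c in candidates if passes_constraints(c, min_points)]


candidates = [
    Candidate("mug", True, False, 400, True),
    Candidate("person", False, True, 5000),
    Candidate("speck", True, False, 12),
    Candidate("plant", True, False, 900),
    Candidate("table_leg", True, False, 300, False),
]

print(discover(candidates))  # ['mug', 'plant']
```

One plausible intuition for the speed-up the team reports is visible even in this toy: candidates rejected by cheap checks never reach the more expensive steps of comparing and grouping candidates, so less work is done overall.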

The CMU team found that adding domain knowledge to the video input almost tripled the number of objects HERB could discover and reduced computer processing time by a factor of 190.

Although this has not yet been implemented, HERB and other robots could use the Internet to build an even richer understanding of objects. Earlier work by Srinivasa showed that robots can use crowdsourcing via Amazon Mechanical Turk to help them understand objects. Likewise, a robot might access information-sharing sites such as RoboEarth, which we have described previously, to find the name of an object, retrieve data about its properties, or get images of parts of the object it has not been able to observe.

For more information, you can read the paper: “Exploiting Domain Knowledge for Object Discovery” [3MB PDF].

Although I like the effort, it doesn’t appear to be precise enough.

I wonder why they haven’t used a technology that allows more precise 3D scanning.