Robot co-active learning adjusts to context-driven user preferences

Researchers at Cornell University have created an algorithm that enables robots to “coactively learn” from humans and adjust their actions while those actions are in progress. Their approach, which combines machine learning, object and user association, and trajectory adjustment, could allow robots to manipulate objects more reliably and to operate more safely when interacting with people.

On robotic assembly lines, work areas are usually covered with markings and warnings to protect anyone who might be inside them while the robots are running. Even so, most of these robots simply repeat memorized routines, and accidents do occur. Aside from safety, robots being developed to help in our homes face another set of problems, ranging from handling objects according to their properties to moving and using tools safely.

“We give the robot a lot of flexibility in learning,” said Ashutosh Saxena, assistant professor of computer science. “The robot can learn from corrective human feedback in order to plan its actions that are suitable to the environment and the objects present.”

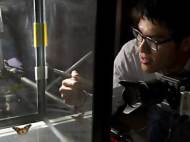

The researchers tested their algorithm on a Baxter robot from Rethink Robotics. Baxter is already smarter than the average industrial robot: sensors in its hands and around its arms allow it to adapt to its surroundings. If Baxter senses that it is about to hit something, it slows down to reduce the force of the impact.
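The article does not say how Baxter decides how much to slow down. As a toy illustration only, and not Rethink Robotics’ actual controller, the sketch below assumes a hypothetical `scaled_speed` rule that lowers speed linearly as the measured distance to an obstacle shrinks from a “slow zone” to a “stop zone”. All thresholds are made-up values.

```python
def scaled_speed(distance_m: float, max_speed: float = 1.0,
                 slow_zone_m: float = 0.5, stop_zone_m: float = 0.1) -> float:
    """Hypothetical proximity-based speed rule (not Baxter's real controller).

    Full speed when the obstacle is at least slow_zone_m away, zero speed
    at stop_zone_m or closer, and a linear ramp in between.
    """
    if distance_m >= slow_zone_m:
        return max_speed
    if distance_m <= stop_zone_m:
        return 0.0
    # Fraction of the way from the stop zone out to the slow zone.
    fraction = (distance_m - stop_zone_m) / (slow_zone_m - stop_zone_m)
    return max_speed * fraction


for d in (1.0, 0.3, 0.05):
    print(f"{d:.2f} m -> speed {scaled_speed(d):.2f}")
```

A linear ramp is the simplest choice; a real system might instead limit joint torques or use a smoother profile.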

Sensors around the robot’s head let it detect people nearby and adapt to its environment. Other industrial robots, by contrast, either keep repeating their one task or shut down when their sensors detect a change in their environment.

Baxter’s arms have two elbows and a rotating wrist, so it’s not always obvious to a human operator how best to move the arms to accomplish a particular task. So Saxena and graduate student Ashesh Jain drew on previous work related to object handling and added programming that lets the robot plan its own motions.

The researchers built a mock supermarket checkout line where the robot learns how to handle objects. Baxter displays three possible trajectories on a touch screen, and the operator selects the one that looks most suitable. If needed, the operator can also give corrective feedback.
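One simple way a system could choose which three trajectories to display is to score every candidate with a learned linear function and keep the top three. The sketch below assumes exactly that; the `Trajectory` type, its feature vector, and the `score` and `top_k` helpers are hypothetical and are not the Cornell team’s code.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Trajectory:
    name: str
    features: List[float]  # hypothetical numeric description of the motion


def score(weights: Sequence[float], traj: Trajectory) -> float:
    """Linear score w . phi(trajectory); higher means more preferred."""
    return sum(w * f for w, f in zip(weights, traj.features))


def top_k(weights: Sequence[float], candidates: List[Trajectory],
          k: int = 3) -> List[Trajectory]:
    """Return the k highest-scoring candidates, best first."""
    return sorted(candidates, key=lambda t: score(weights, t), reverse=True)[:k]


weights = [1.0, -0.5]  # hypothetical learned weights
candidates = [
    Trajectory("a", [1.0, 0.0]),
    Trajectory("b", [0.0, 1.0]),
    Trajectory("c", [2.0, 1.0]),
    Trajectory("d", [0.5, 0.0]),
]
shown = top_k(weights, candidates)  # the three options put on the screen
print([t.name for t in shown])
```

Here the scores are 1.0, -0.5, 1.5 and 0.5, so candidates `c`, `a` and `d` would be shown, in that order.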

As the robot executes its movements, the operator can intervene in “zero-G” mode, manually guiding the arms to fine-tune the trajectory. In zero-G mode, the robot’s arms hold their position against gravity but allow the operator to move them. The researchers’ coactive learning algorithm lets the robot learn incrementally, refining its trajectory a little more each time the operator makes adjustments or selects a trajectory on the touch screen. Even when the user’s feedback is weak, improving only slightly on the robot’s proposal rather than demonstrating the best possible motion, the robot gradually learns to perform the optimal movement.
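The article does not spell out the update rule. A standard coactive-learning update, the preference perceptron, nudges a linear weight vector toward the features of the user’s improved trajectory and away from the robot’s proposal: w ← w + φ(user) − φ(robot). The sketch below assumes that rule and hypothetical two-element feature vectors (lift height and smoothness); the paper’s actual features and algorithm may differ. Because the user only has to supply something better than the proposal, this kind of update fits the weak feedback described above.

```python
from typing import List, Sequence


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    """Inner product of two equal-length feature/weight vectors."""
    return sum(x * y for x, y in zip(a, b))


def coactive_update(weights: Sequence[float],
                    robot_features: Sequence[float],
                    user_features: Sequence[float],
                    lr: float = 1.0) -> List[float]:
    """Preference-perceptron step: w <- w + lr * (phi(user) - phi(robot))."""
    return [w + lr * (u - r)
            for w, u, r in zip(weights, user_features, robot_features)]


# Hypothetical features: [lift_height, smoothness]
robot_proposal = [1.0, 0.25]  # what the robot did
user_corrected = [0.5, 1.0]   # the operator's adjusted version

w = [0.0, 0.0]
w = coactive_update(w, robot_proposal, user_corrected)
print(w, dot(w, user_corrected), dot(w, robot_proposal))
```

After one update from zero weights, the corrected trajectory scores higher than the robot’s original, because the score gap equals the squared length of φ(user) − φ(robot). Repeating the step over many corrections is what lets the preferences accumulate.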

The robot learns to associate a particular trajectory with each type of object. A quick flip might be the fastest way to move a cereal box, but that wouldn’t work with a carton of eggs. And because eggs are fragile, the robot is taught not to lift them far above the counter. As the video above shows, the robot also learns how to handle knives.
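To make the cereal-box versus egg-carton example concrete, here is a sketch that keeps a separate weight vector per object type and picks the highest-scoring candidate for each. The features (speed, maximum height, number of flips), the weights, and the `best_trajectory` helper are all hypothetical; the weights are hand-set here as if they had already been learned from feedback.

```python
from typing import Dict, List

# Hypothetical trajectory features: [speed, max_height_cm, flips]
CANDIDATES: Dict[str, List[float]] = {
    "quick_flip": [1.0, 30.0, 1.0],
    "low_slide": [0.5, 5.0, 0.0],
}

# Hypothetical per-object weights, hand-set as if learned from feedback.
WEIGHTS: Dict[str, List[float]] = {
    "cereal_box": [2.0, 0.0, 0.0],    # only speed matters
    "egg_carton": [1.0, -0.1, -5.0],  # penalize height and flipping
}


def best_trajectory(obj: str) -> str:
    """Pick the candidate with the highest linear score for this object type."""
    w = WEIGHTS[obj]
    return max(CANDIDATES,
               key=lambda name: sum(wi * fi for wi, fi in zip(w, CANDIDATES[name])))


for obj in WEIGHTS:
    print(obj, "->", best_trajectory(obj))
```

The same candidate motions are ranked differently depending on the object, which is the kind of object-specific preference the article describes.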

In tests with users who were not part of the Cornell research team, most were able to train the robot successfully on a particular task with just five rounds of corrective feedback. The robot was also able to generalize what it had learned, adjusting when the object, the environment, or both were changed.

For more information, you can read an early version of the research paper, “Learning Trajectory Preferences for Manipulators via Iterative Improvement” (PDF).
