Vision-based obstacle avoidance for autonomous MAVs

Small flying machines for patrol, reconnaissance, and search and rescue missions have been in development for some time. While small flying machines that use GPS to find their way to a destination are already common, there is a need for other systems that let them maneuver around obstacles when or where GPS isn’t available. Researchers from Cornell University recently presented a solution that could be used in autonomous flying robots.

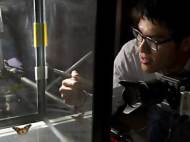

Led by Ashutosh Saxena, assistant professor of computer science at Cornell, the research team focused on the problem of robot perception in situations where human operators can’t react fast enough to avoid an obstacle, or where radio signals may not reach everywhere the robot flies. Saxena and his team have already programmed flying robots to navigate hallways and stairwells; however, those methods aren’t accurate enough at large distances in the wild to plan a route around obstacles.

Saxena is building on methods he devised earlier, inspired by the cues humans unconsciously use to supplement their stereoscopic vision. These methods turn a flat video camera image into a 3D model of the environment using cues such as converging straight lines, the apparent size of familiar objects, and the relation of objects to each other.
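To illustrate just one of those cues, the apparent size of a familiar object, here is a minimal Python sketch based on the textbook pinhole camera relation. It is not the team's learned model; the function name, the parameters and the numbers in the usage note are assumptions made for illustration only.

```python
def distance_from_apparent_size(focal_length_px, real_height_m, image_height_px):
    """Estimate distance to an object of known size (pinhole camera model).

    distance = focal_length * real_height / apparent_height_in_image
    All names and units here are illustrative assumptions.
    """
    if image_height_px <= 0:
        raise ValueError("image_height_px must be positive")
    return focal_length_px * real_height_m / image_height_px
```

For example, with an assumed focal length of 800 pixels, a 2 m tall fence post that appears 100 pixels tall would be estimated at `distance_from_apparent_size(800, 2.0, 100)`, which is 16 m. The same post appearing half as tall would be estimated at twice the distance.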

Graduate students Ian Lenz and Mevlana Gemici trained the robot with 3D pictures of obstacles commonly encountered in the wild, such as tree branches, poles, fences and buildings, and taught it the properties of those objects as well as their relation to each other. The resulting set of rules for deciding what is an obstacle is burned into a chip before the robot flies. Once in flight, the robot breaks the recorded 3D image of its environment into small chunks based on obvious boundaries. It then analyzes these chunks and computes a path through them, constantly making adjustments as the view changes.
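The fragment-then-plan loop described above can be sketched roughly as follows. This is a simplified illustration and not the researchers' implementation: it assumes a fixed grid of patches instead of boundary-based chunks, and a hypothetical `obstacle_score` that averages per-pixel obstacle likelihoods instead of the trained rules burned into the chip.

```python
from statistics import mean


def split_into_patches(image, size):
    """Cut a 2D grid of values into non-overlapping size x size patches.

    Returns a dict mapping (patch_row, patch_col) to a flat list of values.
    A fixed grid is an assumed stand-in for boundary-based segmentation.
    """
    rows, cols = len(image), len(image[0])
    patches = {}
    for r in range(0, rows - size + 1, size):
        for c in range(0, cols - size + 1, size):
            patches[(r // size, c // size)] = [
                image[y][x]
                for y in range(r, r + size)
                for x in range(c, c + size)
            ]
    return patches


def obstacle_score(values):
    """Hypothetical stand-in for the trained classifier: mean value in [0, 1]."""
    return mean(values)


def pick_heading(image, size=2, threshold=0.5):
    """Return the patch column whose worst patch is least obstructed.

    Returns None when no patches exist or every column is blocked.
    """
    worst_by_column = {}
    for (_, col), values in split_into_patches(image, size).items():
        score = obstacle_score(values)
        worst_by_column[col] = max(worst_by_column.get(col, 0.0), score)
    if not worst_by_column:
        return None
    best_col = min(worst_by_column, key=worst_by_column.get)
    if worst_by_column[best_col] >= threshold:
        return None
    return best_col
```

Calling `pick_heading` again on every new frame mirrors the constant adjustment described in the text: the chosen column changes as the obstacle scores in view change.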

In their tests, the researchers used a quadrotor that was trained and then tested in 53 autonomous flights in obstacle-rich environments. The results are promising: it succeeded in 51 flights and failed in two, both times because of wind. The Cornell researchers plan to improve the robot’s response to environmental variations such as wind, and to enable it to detect and avoid moving objects (such as real birds), which they will test by having people throw tennis balls at the flying vehicle.
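As a quick check on those numbers, the success rate can be worked out in a couple of lines of Python. The helper name is an assumption; the counts are the ones reported above.

```python
def success_rate(successes, total):
    """Return the share of successful flights as a percentage."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100.0 * successes / total


# 51 successful flights out of 53, rounded to one decimal place
rate = round(success_rate(51, 53), 1)
```

That puts the success rate at roughly 96 percent over the 53 flights.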

For more information, you can read the paper: “Low-Power Parallel Algorithms for Single Image based Obstacle Avoidance in Aerial Robots” [2.91MB PDF].

This is a very interesting development, since it simplifies object recognition and makes it fast.

Kudos to the Cornell researchers involved.