A step toward mind reading of the moving images in our brains

Scientists at the University of California, Berkeley, are combining brain imaging and computer simulation to reconstruct people’s dynamic visual experiences. They hope that this form of “mind reading” will pave the way for future technologies that could reproduce the moving images in our heads, bringing our dreams and memories onto a screen.

“This is a major leap toward reconstructing internal imagery”, said Professor Jack Gallant, a UC Berkeley neuroscientist. “We are opening a window into the movies in our minds.”

In previous research, Gallant and his colleagues used functional magnetic resonance imaging (fMRI) to record brain activity in the visual cortex while a subject viewed black-and-white photographs. From those recordings they developed a computational model that could accurately predict which picture the subject was looking at. The current research takes this a step further by decoding brain signals generated by moving pictures.

“Our natural visual experience is like watching a movie”, said Shinji Nishimoto, a post-doctoral researcher in Gallant’s lab and lead author of the study. “In order for this technology to have wide applicability, we must understand how the brain processes these dynamic visual experiences.”

In the experiments, Nishimoto and two other members of the research team served as subjects, because the procedure requires volunteers to remain still inside the MRI scanner for hours at a time. fMRI was used to measure blood flow through the visual cortex, the part of the brain that processes visual information. The recorded brain data was divided into small voxels (volumetric pixels), 3D cubes that are the three-dimensional counterpart of 2D pixels. The brain activity recorded while the subjects viewed a first set of Hollywood movie trailers was fed into a computer program that learned to associate visual patterns in the movies with the corresponding brain activity.
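The learning step described above can be pictured as fitting one small model per voxel that predicts its activity from what is on screen. The sketch below is a toy illustration, not the Berkeley team's code: it assumes each time point of a trailer has already been reduced to a short vector of visual features (the three features here are hypothetical placeholders), and it fits each voxel's response as a linear combination of those features using ridge regression solved through the normal equations. The function names `fit_voxel`, `predict` and `solve` are our own.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def solve(a: Matrix, b: Vector) -> Vector:
    """Solve a @ x = b with Gaussian elimination and partial pivoting."""
    n = len(b)
    # Augmented matrix [a | b]; copies rows so the inputs are not mutated.
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        # Swap in the row with the largest entry in this column for stability.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back-substitution on the upper-triangular system.
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def fit_voxel(features: Matrix, response: Vector, ridge: float = 1e-3) -> Vector:
    """Ridge regression for one voxel: minimise ||Xw - y||^2 + ridge * ||w||^2."""
    k = len(features[0])
    # Normal equations: (X^T X + ridge * I) w = X^T y
    xtx = [[sum(row[i] * row[j] for row in features) + (ridge if i == j else 0.0)
            for j in range(k)] for i in range(k)]
    xty = [sum(row[i] * y for row, y in zip(features, response)) for i in range(k)]
    return solve(xtx, xty)


def predict(features: Matrix, weights: Vector) -> Vector:
    """Predicted voxel activity at each time point."""
    return [sum(f * w for f, w in zip(row, weights)) for row in features]


# Toy training data: 6 time points x 3 hypothetical visual features.
train_features = [
    [1.0, 0.0, 0.5],
    [0.0, 1.0, 0.2],
    [0.5, 0.5, 0.9],
    [0.2, 0.8, 0.1],
    [0.9, 0.1, 0.4],
    [0.3, 0.3, 0.7],
]
# Synthetic voxel generated from known weights, so the fit can be checked.
true_weights = [2.0, -1.0, 0.5]
voxel_response = predict(train_features, true_weights)
learned = fit_voxel(train_features, voxel_response, ridge=1e-6)
print([round(w, 3) for w in learned])
```

A real study would fit thousands of voxels and many more features, but the principle is the same: once every voxel has its own weights, the model can predict the brain activity any new clip should evoke.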

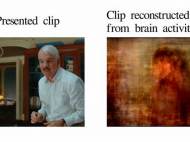

In the following experiments, brain activity evoked by a second set of clips was used to test the movie reconstruction algorithm. Beforehand, the algorithm had been fed 18 million seconds of random YouTube videos so that it could predict the brain activity each of those clips would evoke in a subject. Finally, the 100 clips that the algorithm judged most similar to the clip the subject had most likely seen were merged to produce a blurry reconstruction of the original movie.
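The final step, picking the library clips that best match the observed activity and merging them, can be sketched as follows. This is a simplified illustration under stated assumptions: each library clip is reduced to a single frame of pixel values plus the voxel activity a model predicts for it, similarity is measured with Pearson correlation (our choice, not necessarily the study's measure), and "merging" is taken to mean a pixel-wise average of the top k frames. The names `pearson` and `reconstruct` are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

# A library clip: (frame pixels, voxel activity predicted for that clip).
Clip = Tuple[List[float], List[float]]


def pearson(a: Sequence[float], b: Sequence[float]) -> float:
    """Pearson correlation; returns 0.0 if either input is constant."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    sd_a = math.sqrt(sum((x - mean_a) ** 2 for x in a))
    sd_b = math.sqrt(sum((y - mean_b) ** 2 for y in b))
    if sd_a == 0.0 or sd_b == 0.0:
        return 0.0
    return cov / (sd_a * sd_b)


def reconstruct(observed: Sequence[float], library: List[Clip], k: int = 100) -> List[float]:
    """Average the frames of the k clips whose predicted activity best matches `observed`."""
    ranked = sorted(library, key=lambda clip: pearson(observed, clip[1]), reverse=True)
    top = ranked[:k]  # fewer than k if the library is small
    n_pixels = len(top[0][0])
    # Pixel-wise mean of the selected frames: the "blurry" reconstruction.
    return [sum(frame[p] for frame, _ in top) / len(top) for p in range(n_pixels)]


# Toy library of three 4-pixel "clips" with made-up predicted activity.
library: List[Clip] = [
    ([1.0, 1.0, 0.0, 0.0], [1.0, 2.0, 3.0]),
    ([0.0, 1.0, 1.0, 0.0], [1.0, 2.5, 3.0]),
    ([0.0, 0.0, 1.0, 1.0], [3.0, 2.0, 1.0]),
]
observed_activity = [0.9, 2.1, 3.2]
print(reconstruct(observed_activity, library, k=2))
```

Averaging many good-but-imperfect matches is what makes the result blurry: details that differ between the selected clips wash out, while features they share survive.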

Reconstructing movies from brain scans has been challenging because the blood flow signals measured with fMRI change much more slowly than the neural signals that encode the dynamic information in movies.

“We need to know how the brain works in naturalistic conditions. For that, we need to first understand how the brain works while we are watching movies”, said Nishimoto. “We addressed this problem by developing a two-stage model that separately describes the underlying neural population and blood flow signals”.
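A toy version of the two-stage idea can make the timing problem concrete. In this sketch, which is illustrative rather than the published model, stage one turns stimulus features into fast neural activity with a rectified weighted sum, and stage two converts that activity into a slow blood flow signal by convolving it with an assumed gamma-shaped hemodynamic kernel. The kernel shape, its parameters, and all function names are assumptions made for the example.

```python
import math
from typing import List, Sequence


def neural_response(features: Sequence[Sequence[float]], weights: Sequence[float]) -> List[float]:
    """Stage 1: fast neural population activity per time step, modelled
    (hypothetically) as a rectified weighted sum of stimulus features."""
    return [max(0.0, sum(f * w for f, w in zip(row, weights))) for row in features]


def hemodynamic_kernel(length: int = 12, peak: float = 5.0) -> List[float]:
    """Assumed gamma-shaped kernel t**peak * exp(-t), which peaks at t = peak,
    normalised so its taps sum to 1."""
    raw = [(t ** peak) * math.exp(-t) for t in range(length)]
    total = sum(raw)
    return [r / total for r in raw]


def blood_flow(neural: Sequence[float], kernel: Sequence[float]) -> List[float]:
    """Stage 2: causal convolution of neural activity with the slow kernel."""
    return [
        sum(kernel[lag] * neural[t - lag] for lag in range(len(kernel)) if t - lag >= 0)
        for t in range(len(neural))
    ]


# A brief stimulus event at time step 2, one feature, unit weight.
features = [[1.0] if t == 2 else [0.0] for t in range(20)]
neural = neural_response(features, [1.0])
bold = blood_flow(neural, hemodynamic_kernel())
print("neural peak at", neural.index(max(neural)))
print("blood flow peak at", bold.index(max(bold)))
```

With a single brief burst of neural activity at time step 2, the simulated blood flow signal peaks several steps later and is spread over many steps. Modelling the two stages separately lets a decoder account for that lag and smearing instead of treating the slow fMRI signal as if it tracked the movie frame by frame.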

Eventually, practical applications of the technology could include a better understanding of what goes on in the minds of people who cannot communicate verbally, such as stroke victims, coma patients and people with neurodegenerative diseases. However, researchers point out that the technology is decades from allowing users to read others’ thoughts and intentions.

For more information, read the article “Reconstructing Visual Experiences from Brain Activity Evoked by Natural Movies”, published in the journal Current Biology.
