MIT’s BiDi screen enables gestural control of on-screen objects

Most current touch screen displays use capacitive sensing, in which the proximity of a finger disrupts the electrical connection between sensors in the screen. A competing approach, which uses embedded optical sensors to track the movement of the user’s fingers, is just now coming to market. Researchers at MIT’s Media Lab have gone a step further, using such sensors to turn displays into giant lensless cameras. Matthew Hirsch, a PhD candidate at the Media Lab, developed the new BiDi Screen along with Media Lab professors Ramesh Raskar and Henry Holtzman and visiting researcher Douglas Lanman. It is a thin, depth-sensing LCD that uses light fields to support 3D interaction.

Some experimental systems (such as Microsoft’s Natal) use small cameras embedded in a display to capture information about gestures. But because the cameras are offset from the center of the screen, they don’t work well at short distances, so they can’t provide the seamless transition that close-range screen interactions need. Cameras set far enough behind the screen can provide that transition, as they do in Microsoft’s SecondLight, but they add to the display’s thickness and require costly hardware to render the screen alternately transparent and opaque.

“The goal with this is to be able to incorporate the gestural display into a thin LCD device” — like a cell phone — “and to be able to do it without wearing gloves or anything like that,” Hirsch says.

The simplest way to explain how the system works, Lanman says, is to imagine that, instead of an LCD, an array of pinholes is placed in front of the sensors. Light passing through each pinhole will strike a small block of sensors, producing a low-resolution image. Since each pinhole image is taken from a slightly different position, all the images together provide a good deal of depth information about whatever lies before the screen. An array of liquid crystals could simulate a sheet of pinholes simply by displaying a pattern in which, say, the central pixel in each 19-by-19 block of pixels is white (transparent) while all the others are black.
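The pinhole pattern Lanman describes can be sketched in a few lines of Python. This is only an illustration of the idea, not the researchers' code: the function name `pinhole_mask` and the small 2-by-3 grid of blocks are assumptions made for the example; only the 19-by-19 block size comes from the description above.

```python
def pinhole_mask(blocks_y, blocks_x, block=19):
    """Build a tiled pinhole pattern as a 2D list of 0/1 values.

    1 means a white (transparent) LCD pixel, 0 means black.
    Only the central pixel of each block-by-block tile is open.
    The function name and grid size are illustrative assumptions.
    """
    center = block // 2  # index 9 for a 19-pixel block
    height, width = blocks_y * block, blocks_x * block
    return [
        [1 if (y % block == center and x % block == center) else 0
         for x in range(width)]
        for y in range(height)
    ]


# A hypothetical 2-by-3 grid of blocks, i.e. a 38-by-57 pixel patch of the LCD.
mask = pinhole_mask(2, 3)
open_pixels = sum(sum(row) for row in mask)
print(f"{len(mask)} x {len(mask[0])} pixels, {open_pixels} pinholes")
```

Each open pixel acts as one pinhole, so the sensors behind each block would record one small image from a slightly different viewpoint.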

The problem with pinholes, Lanman explains, is that they allow very little light to reach the sensors, so they require exposure times that are too long to be practical. So the LCD instead displays a pattern in which each 19-by-19 block is subdivided into a regular pattern of black-and-white rectangles of different sizes. Since about as much of each block is white as black, the blocks pass much more light.
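A rough back-of-the-envelope comparison shows how large the difference in light is. The sketch below assumes the coded pattern is exactly half open, which is an idealization (a 19-by-19 block has an odd number of pixels), and the variable names are made up for the example.

```python
BLOCK = 19
pixels_per_block = BLOCK * BLOCK  # 361 LCD pixels in each block

# Pinhole pattern: one transparent pixel per block.
pinhole_fraction = 1 / pixels_per_block

# Coded pattern: assumed here to be half transparent (an idealization).
coded_fraction = 0.5

# How many times more light the coded pattern passes than the pinhole pattern.
light_gain = coded_fraction / pinhole_fraction

print(f"pinhole open fraction: {pinhole_fraction:.4%}")
print(f"coded open fraction:   {coded_fraction:.0%}")
print(f"light gain:            {light_gain:.1f}x")
```

Under that assumption the coded pattern passes on the order of 180 times as much light as the pinhole array, which is why it allows much shorter exposures.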

The 19-by-19 blocks are all adjacent to each other, however, so the images they pass to the sensors overlap in a confusing jumble. But the pattern of black-and-white squares allows the system to computationally disentangle the images, capturing the same depth information that a pinhole array would, but capturing it much more quickly.

The BiDi screen has several benefits over related techniques for imaging the space in front of a display. Chief among them is the ability to capture multiple orthographic images, with a potentially thin device, without blocking the backlight or portions of the display. Besides enabling lighting direction and depth measurements, these multi-view images support the creation of a true mirror, in which the subject gazes into her own eyes, or a videoconferencing application in which the participants have direct eye contact. However, the limited resolution of the prototype does not produce imagery competitive with consumer webcams.

The BiDi screen requires separating the light-modulating and light-sensing layers, complicating the display design. In the prototype, an additional 2.5 cm was added to the display thickness to allow the placement of the diffuser. In the future a large-format sensor could be accommodated within this distance; however, the current prototype uses a pair of cameras placed about 1 m behind the diffuser, significantly increasing the device dimensions. Also, because the LCD is switched between display and capture modes, the proposed design will reduce the native frame rate. Image flicker will result unless the display frame rate remains above the flicker fusion threshold. Lastly, the BiDi screen requires external illumination, either from the room or a light-emitting widget, in order for its capture mode to function. Such external illumination reduces the displayed image contrast. This effect may be mitigated by applying an anti-reflective coating to the surface of the screen.
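The frame-rate trade-off can be illustrated with simple arithmetic. Everything numeric in this sketch is an assumption for illustration: the panel refresh rates, the one-to-one split between display and capture frames, and the 60 Hz figure used as a stand-in for the flicker fusion threshold, which in practice varies with the viewer and the viewing conditions.

```python
def display_rate_hz(native_hz, display_frames, capture_frames):
    """Rate at which ordinary display frames are shown when the panel
    alternates between display frames and capture (mask) frames."""
    cycle = display_frames + capture_frames
    return native_hz * display_frames / cycle


FLICKER_FUSION_HZ = 60.0  # assumed threshold, for illustration only

# Hypothetical panels that alternate one display frame with one capture frame.
results = {}
for native_hz in (60.0, 120.0):
    rate = display_rate_hz(native_hz, display_frames=1, capture_frames=1)
    results[native_hz] = rate
    status = "ok" if rate >= FLICKER_FUSION_HZ else "visible flicker likely"
    print(f"{native_hz:.0f} Hz panel -> {rate:.0f} Hz display rate ({status})")
```

With an even split, the panel needs roughly twice the refresh rate of an ordinary display to keep the visible image above the assumed threshold.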

The Media Lab system requires an array of liquid crystals, as in an ordinary LCD, with an array of optical sensors right behind it. The liquid crystals serve, in a sense, as a lens, displaying a black-and-white pattern that lets light through to the sensors. But that pattern alternates so rapidly with whatever the LCD is otherwise displaying that the viewer never notices it.

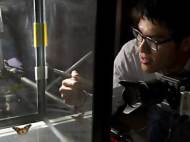

LCDs with built-in optical sensors are so new that the Media Lab researchers haven’t been able to procure any yet, but they mocked up a display in the lab to test their approach. Like some existing touch screen systems, the mockup uses a camera some distance from the screen to record the images that pass through the blocks of black-and-white squares. In experiments in the lab, the researchers showed that they could manipulate on-screen objects using hand gestures and move seamlessly between gestural control and ordinary touch screen interactions.